Introduction

November 17, 2025: New reports point to an escalating shortage of DRAM, particularly high-bandwidth memory (HBM), with price spikes reverberating through the chip supply chain as AI demand surges. Analysts have noted double-digit percentage price increases since September and warn that advanced packaging capacity remains tight; meanwhile, Korean memory leaders Samsung Electronics and SK Hynix are signaling significant new fab and packaging outlays. In short, AI’s pace is now gated as much by memory as by GPUs. (TrendForce)

Why it matters now

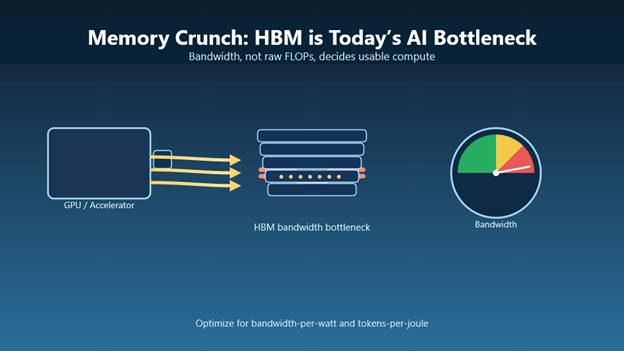

• HBM is the throttle: accelerator performance depends on memory bandwidth as much as on core count.

• Pricing power shifts upstream: memory and advanced packaging set delivery cadence and cost curves.

• Capex wave, slow relief: megaprojects help, but on an 18–36 month horizon, not this quarter.

• System design pivot: engineering focuses on bandwidth-per-watt and tokens-per-joule, not raw FLOPs.
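The bandwidth-versus-compute point in the bullets above can be made concrete with a simple roofline-style estimate. The sketch below is illustrative only: the accelerator figures (1 PFLOP/s peak, 3 TB/s memory bandwidth, 700 W) and the function names are assumptions chosen for round numbers, not specifications of any real product.

```python
# Roofline-style sketch: attainable throughput is capped by whichever is lower,
# the compute ceiling or the memory-bandwidth ceiling.
# All hardware numbers below are hypothetical, chosen for illustration.

def attainable_flops(peak_flops: float,
                     bandwidth_bytes_per_s: float,
                     intensity_flops_per_byte: float) -> float:
    """Return min(compute ceiling, bandwidth * arithmetic intensity)."""
    return min(peak_flops, bandwidth_bytes_per_s * intensity_flops_per_byte)


def tokens_per_joule(tokens_per_s: float, power_watts: float) -> float:
    """Tokens generated per joule of energy (1 W = 1 J/s)."""
    return tokens_per_s / power_watts


PEAK_FLOPS = 1.0e15   # hypothetical: 1 PFLOP/s
BANDWIDTH = 3.0e12    # hypothetical: 3 TB/s of memory bandwidth
POWER_WATTS = 700.0   # hypothetical board power

# Arithmetic intensity at which the workload stops being bandwidth-bound.
ridge_point = PEAK_FLOPS / BANDWIDTH

for intensity in (2.0, 100.0, 500.0):
    flops = attainable_flops(PEAK_FLOPS, BANDWIDTH, intensity)
    bound = "bandwidth-bound" if intensity < ridge_point else "compute-bound"
    print(f"intensity={intensity:>6.1f} FLOPs/byte -> "
          f"{flops / PEAK_FLOPS:6.1%} of peak ({bound})")

# Hypothetical serving rate, to show how tokens-per-joule is computed.
print(f"tokens per joule: {tokens_per_joule(1400.0, POWER_WATTS):.2f}")
```

Under these assumed numbers, a workload performing 2 FLOPs per byte moved reaches well under 1% of peak compute, which is why adding cores without adding bandwidth does little for such workloads.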

Call-out

Memory—not compute—is today’s defining constraint.

Business implications

For hyperscalers and cloud providers, procurement must treat HBM and advanced packaging as first-class constraints. Expect multi-year take-or-pay contracts that span wafer starts, interposers, and substrates, plus tighter delivery windows and more aggressive penalty clauses tied to memory availability. On the software side, teams will prioritize memory-efficient training and serving techniques, such as quantization, sparse attention, activation checkpointing, and operator fusion, to make the most of scarce bandwidth.
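As a rough illustration of why quantization helps when bandwidth is the scarce resource, the sketch below estimates an upper bound on single-stream decode speed. It assumes a deliberately simplified model in which every generated token requires reading all model weights from memory once, and it ignores the KV cache, activations, and batching. The 70-billion-parameter model and 3 TB/s bandwidth figure are hypothetical.

```python
# Simplified bound: decode speed <= memory bandwidth / bytes of weights read per token.
# Assumption (not from the article): each token reads every weight exactly once.

def weight_bytes(num_params: float, bits_per_weight: int) -> float:
    """Total size of the model weights in bytes at a given precision."""
    return num_params * bits_per_weight / 8


def max_decode_tokens_per_s(bandwidth_bytes_per_s: float,
                            num_params: float,
                            bits_per_weight: int) -> float:
    """Upper bound on tokens/s for one stream under the simplified model."""
    return bandwidth_bytes_per_s / weight_bytes(num_params, bits_per_weight)


NUM_PARAMS = 70e9    # hypothetical 70B-parameter model
BANDWIDTH = 3.0e12   # hypothetical 3 TB/s accelerator

for bits in (16, 8, 4):
    size_gb = weight_bytes(NUM_PARAMS, bits) / 1e9
    rate = max_decode_tokens_per_s(BANDWIDTH, NUM_PARAMS, bits)
    print(f"{bits:>2}-bit weights: {size_gb:6.1f} GB, "
          f"at most {rate:6.1f} tokens/s per stream")
```

In this model, halving the bits per weight halves the bytes moved per token and doubles the bandwidth-limited ceiling. Real gains depend on kernel support, accuracy tolerance, and batching.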

Enterprises should plan for uneven hardware availability through 2026, with premium pricing on HBM-rich accelerators. A pragmatic path is a hybrid deployment model: place training in regions with steadier supply and power, and push inference to edge or CPU-plus-LPDDR platforms when response latency and total cost per token are most critical. Vendors in cooling, power electronics, and interconnects benefit as operators seek higher utilization per watt from memory-bound systems.

For component makers, tight capacity at outsourced semiconductor assembly and test (OSAT) providers and substrate suppliers will keep advanced packaging as the chokepoint. This sustains firm pricing for HBM stacks and drives deeper collaboration between memory suppliers, foundries, and cloud providers to co-finance long-lead tooling.

Looking ahead

Near term (3–9 months): Memory pricing remains firm; OSATs and substrate suppliers continue to be the pinch point. Expect more long-lead contracts and co-investment deals between chipmakers and cloud platforms. (TrendForce)

Longer term (12–36 months): Additional Korean capacity and packaging lines ease the crunch. Architectural innovation shifts to bandwidth-amplifying techniques: denser 3D DRAM, in-package optical links, and schedulers that route jobs by bandwidth class. Metrics evolve from FLOPs to “useful tokens per joule” as boards and budgets reward memory-savvy designs. (Chosun Ilbo)
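One way to picture a scheduler that routes jobs by bandwidth class is sketched below. It is a hypothetical illustration, not a description of any existing scheduler: the `Pool` and `Job` types, the pool figures, and the routing rule (send a job to the HBM-rich pool only if it would be bandwidth-bound on the standard pool) are all assumptions.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Pool:
    """A hypothetical group of accelerators with similar characteristics."""
    name: str
    peak_flops: float
    bandwidth_bytes_per_s: float

    @property
    def ridge_point(self) -> float:
        """FLOPs per byte below which work on this pool is bandwidth-bound."""
        return self.peak_flops / self.bandwidth_bytes_per_s


@dataclass(frozen=True)
class Job:
    """A hypothetical job described by its arithmetic intensity."""
    name: str
    intensity_flops_per_byte: float


def bandwidth_class(job: Job, pool: Pool) -> str:
    """Classify a job relative to a pool's ridge point."""
    if job.intensity_flops_per_byte < pool.ridge_point:
        return "bandwidth-bound"
    return "compute-bound"


def route(job: Job, hbm_pool: Pool, standard_pool: Pool) -> Pool:
    """Reserve scarce HBM-rich hardware for jobs that need the bandwidth."""
    if bandwidth_class(job, standard_pool) == "bandwidth-bound":
        return hbm_pool
    return standard_pool


# Illustrative numbers only.
HBM_POOL = Pool("hbm-rich", peak_flops=1.0e15, bandwidth_bytes_per_s=3.0e12)
STANDARD_POOL = Pool("standard", peak_flops=5.0e14, bandwidth_bytes_per_s=5.0e11)

for job in (Job("llm-decode", 2.0), Job("large-batch-matmul", 2000.0)):
    print(f"{job.name} -> {route(job, HBM_POOL, STANDARD_POOL).name}")
```

A production scheduler would also weigh memory capacity, queue depth, and cost; the point here is only that a job's bytes-per-FLOP profile can serve as a routing key.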

The upshot

The AI race is no longer about who has more GPUs. Leadership will belong to those who secure memory at scale, optimize models for bandwidth efficiency, and align long-term supply with power and packaging. The next competitive moat is measured in gigabytes per second, not just teraFLOPs.

References

• TrendForce, “Memory Crunch Ripples Across Chip Supply Chain,” November 17, 2025.

• Korea JoongAng Daily, “AI demand influences memory market with rising prices, big investments,” November 17, 2025.

• Chosun Ilbo (English), “Samsung Electronics, SK Hynix Eye 200 Trillion Won Investments,” November 17, 2025.
