Introduction

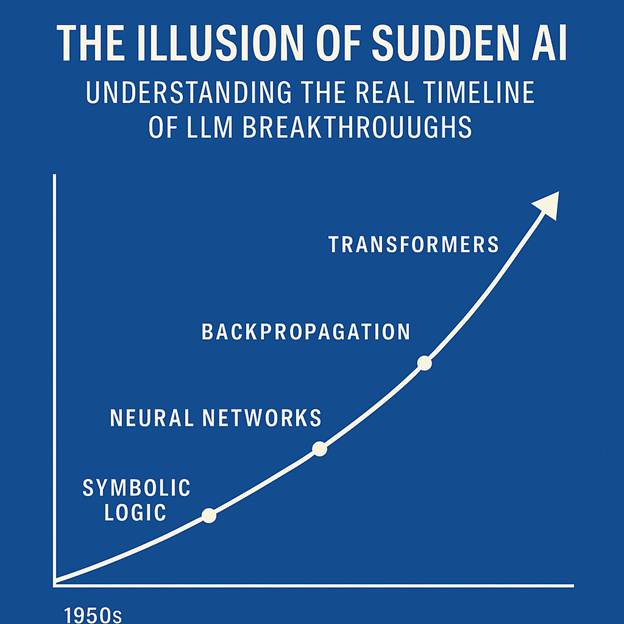

Artificial intelligence appears to have erupted into public consciousness overnight, reshaping industries and redefining how people interact with technology. Yet today’s surge in large language models (LLMs) is not the result of a single breakthrough. It is the cumulative product of seventy years of layered innovation: symbolic AI in the 1950s, multi-layer neural networks trained with backpropagation in the 1980s, GPU acceleration in the 2000s, and the introduction of the Transformer architecture in 2017. The recent explosion in capability marks the moment when these long-standing technological threads finally intersected. Understanding this history lets us interpret today’s disruption with clearer perspective and prepare for what comes next.

Why it matters now

This matters because we are no longer witnessing incremental improvement but a platform shift. LLMs are changing the fundamental interface of computing, moving it from buttons and menus to natural language itself. Knowledge work, customer interactions, software development, and operational workflows are all being re-architected around conversational reasoning. For the first time, many complex multi-step tasks can be expressed as plain-language instructions rather than code, letting organizations automate processes that were previously out of reach for digital tools. The inflection point we are experiencing is the result of decades of technological compounding that has now reached critical mass.

Call-out

“AI didn’t arrive suddenly; the world finally became ready for it.”

Business implications

Organizations across every major sector will feel the impact of this shift. Knowledge-intensive industries, including finance, healthcare, law, engineering, and cybersecurity, are already redesigning workflows around AI copilots that can draft, analyze, summarize, or recommend decisions in real time. Software creation accelerates when natural-language prompts replace much of the syntax developers once wrote by hand. Customer engagement becomes more personalized and responsive as conversational agents take over work once handled by tiered support systems. Even internal decision-making changes as executives use LLMs to model scenarios, draft forecasts, and surface strategic insights. Companies that treat AI as an add-on will fall behind those that rebuild their infrastructure, processes, and culture around these emerging capabilities.

Looking ahead

In the short term, enterprises will integrate AI assistants across customer touchpoints and operational workflows. In the mid-term, industry-specific models will emerge, trained on proprietary data and optimized for compliance, accuracy, and reliability. In the long term, LLMs will become the universal interface layer for business systems, turning everyday language into a programmable asset. The convergence of better models, cheaper compute, and deeper integration with enterprise data will produce tools capable of autonomous operation, not merely assistance. In effect, the next decade will resemble the early internet era: rapid experimentation, new business categories, and the structural redefinition of entire markets.

The upshot

This is not a temporary wave or a hype cycle. It is the culmination of seventy years of technological layering reaching its activation point. The suddenness we perceive is not sudden at all; it is the final click in a long chain of innovations. Businesses that understand this history will recognize that the shift toward AI-driven workflows is irreversible and that now is the moment to adapt, experiment, and build. The future belongs to organizations that learn to think, operate, and deliver value in partnership with intelligent systems.

References

- Vaswani, A. et al. “Attention Is All You Need.” Advances in Neural Information Processing Systems, 2017.

- Bengio, Y. “Learning Deep Architectures for AI.” Foundations and Trends in Machine Learning, 2009.

- Rumelhart, D., Hinton, G., Williams, R. “Learning Representations by Back-propagating Errors.” Nature, 1986.
