Introduction

Following new research showing that leading AI models can be compromised with a universal adversarial suffix, a central question emerges: How do we build AI systems that cannot be trivially manipulated? Today’s analysis reveals two complementary approaches—formal verification and runtime Zero Trust enforcement—each capable of addressing the structural weaknesses highlighted in the recent AI safety crisis.

The attached documents outline these two pathways: the mathematically rigorous world of Lean4 and formal methods, and the operational, real-time protection offered by TrustedPlatform’s Zero Trust enclaves. Together, they form a coherent response to the vulnerabilities exposed in today’s AI systems.

Why It Matters Now

The universal jailbreaking attack shows that current safeguards—filters, pattern blockers, heuristic guardrails—are too fragile. They fail because they rely on emergent behavior rather than provable guarantees. The documents provide two disruptive countermeasures:

- Lean4 and formal methods offer mathematical guarantees.

VentureBeat’s analysis argues that Lean4 can machine-check each formalized reasoning step an AI produces. Harmonic AI’s Aristotle system shows that an AI can refuse to answer unless it can produce a Lean4-verified proof (pages 3–5). For claims that can be stated formally, this rules out hallucinated answers.

- TrustedPlatform provides runtime protection, microsegmentation, and immutable audit.

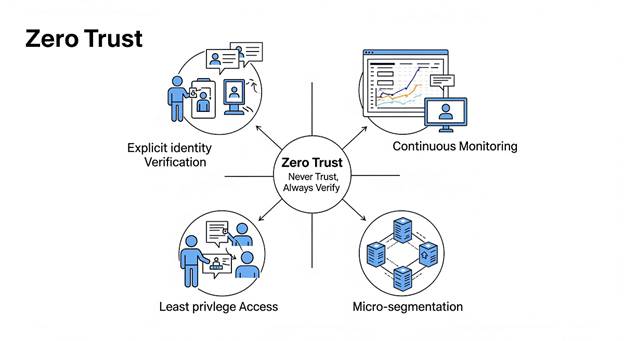

The formal analysis document shows how Zero Trust enclaves restrict AI agents so they cannot be hijacked—even if compromised prompts penetrate their reasoning layer (pages 2–4). TrustedPlatform ensures that even a misbehaving model cannot reach sensitive data or lateral system components.

Together, these two technologies address both logical correctness and operational integrity.

Call-Out

Provable reasoning + enforced containment = the first viable blueprint for trustworthy AI.

Business Implications

The attached materials make clear that organizations now face a paradigm shift. To deploy AI safely at scale:

1. AI must validate its own reasoning

Lean4 checks every formalized inference step, moving AI from probabilistic guessing toward deterministic, verifiable problem solving.

• Page 3: Lean4 enforces deterministic, auditable correctness.

• Page 4: Lean4 catches hallucinations as they occur.

• Page 5: AI systems like Aristotle achieve IMO-gold-medal accuracy with proofs attached.
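To make "proofs attached" concrete, here is a minimal Lean 4 sketch of what the checker accepts and rejects. The statements are illustrative choices, not examples taken from the attached documents.

```lean
-- Accepted: both sides reduce to the same value, so `rfl` type-checks.
example : 2 + 2 = 4 := rfl

-- Accepted: a general statement, justified by a core library lemma.
theorem add_comm_example (a b : Nat) : a + b = b + a :=
  Nat.add_comm a b

-- Rejected if uncommented: no proof of a false statement type-checks.
-- example : 2 + 2 = 5 := rfl
```

The point is that acceptance depends on the proof checking, not on how plausible the claim sounds.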

For industries regulated by law, safety, or compliance—finance, healthcare, energy, aerospace—this is transformative.
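The refuse-unless-verified behavior described above can be sketched as a small gate in Python. This is an illustration under stated assumptions, not Aristotle's actual design: `Candidate`, `answer_or_refuse`, and `toy_checker` are hypothetical names, and the toy checker only re-derives simple additions where a real deployment would call a proof checker such as Lean4.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class Candidate:
    claim: str  # the statement the model wants to assert, e.g. "2 + 2 = 4"
    proof: str  # the proof text the model offers in support of the claim


def answer_or_refuse(
    candidate: Candidate,
    check: Callable[[Candidate], bool],
) -> Optional[str]:
    """Return the claim only if the checker accepts it; otherwise refuse (None)."""
    return candidate.claim if check(candidate) else None


def toy_checker(candidate: Candidate) -> bool:
    # Hypothetical stand-in for a real proof checker. It ignores the proof
    # text and simply re-derives claims of the form "a + b = c".
    try:
        lhs, rhs = candidate.claim.split("=")
        a, b = lhs.split("+")
        return int(a) + int(b) == int(rhs)
    except ValueError:
        # Anything the toy checker cannot parse is treated as unverified.
        return False
```

The design choice worth noting is the default: anything the checker cannot verify is refused rather than passed through.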

2. AI must operate inside controlled, Zero-Trust boundaries

Formal correctness is not enough; models can still be misled.

The Formal Methods vs. TrustedPlatform document explains that TrustedPlatform adds essential runtime defenses:

• Hardware-anchored identity and microsegmentation

• Policy-enforced communication limits

• Blockchain-anchored audit of all AI agent actions

• Real-time isolation of misbehaving components

This means that even if an AI is compromised, the blast radius shrinks to the enclave it runs in.
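As a rough sketch of how policy-enforced communication limits and real-time isolation could fit together, consider a default-deny allowlist that quarantines an agent after repeated violations. The `Enclave` class, its fields, and the denial threshold are assumptions made for illustration; they do not describe TrustedPlatform's actual interface.

```python
from dataclasses import dataclass, field


@dataclass
class Enclave:
    # Default-deny: only (source, destination) pairs listed here may communicate.
    allowed: set[tuple[str, str]]
    isolated: set[str] = field(default_factory=set)
    denials: dict[str, int] = field(default_factory=dict)
    max_denials: int = 3  # hypothetical threshold before an agent is isolated

    def request(self, source: str, destination: str) -> bool:
        """Return True if the connection is permitted under the policy."""
        if source in self.isolated:
            return False  # isolated agents lose all access, even allowed routes
        if (source, destination) in self.allowed:
            return True
        # Count the violation and isolate the agent once it hits the threshold.
        self.denials[source] = self.denials.get(source, 0) + 1
        if self.denials[source] >= self.max_denials:
            self.isolated.add(source)
        return False
```

In this sketch a hijacked agent can still try to reach a payroll database, but the attempt fails at the policy layer, and repeated attempts cut the agent off altogether.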

3. Combined assurance becomes a competitive advantage

The VentureBeat analysis notes that Lean4 is becoming a key differentiator in enterprise AI development (page 7).

Similarly, TrustedPlatform’s enforcement creates provable operational compliance.

Enterprises that adopt both create AI systems that can:

• Demonstrate correctness

• Prove adherence to rules

• Show immutable evidence of behavior

• Prevent compromise at runtime

• Satisfy regulatory scrutiny

• Win customer trust in mission-critical applications

Looking Ahead

Over the next 24–48 months, the most profound shift won’t be in model size—it will be in model assurance. Based on the attached documents:

1. Formally verified AI becomes standard in high-stakes domains

Harmonic AI already demonstrates this with mathematically guaranteed outputs (pages 4–5).

Expect wide adoption in:

• medicine

• aerospace

• energy systems

• financial decisioning

• defense & intelligence

2. Runtime Zero-Trust becomes mandatory for deployed AI agents

The analysis of TrustedPlatform shows it mitigates supply-chain attacks, rogue agent behavior, and prompt injection through network isolation and cryptographic identity (pages 3–4).
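One way to see why cryptographic identity blunts prompt injection: text injected into a prompt can claim to be any agent, but it cannot produce a valid signature without that agent's key. The sketch below uses HMAC with shared secrets from Python's standard library as a simplified stand-in. The function names and key registry are hypothetical, and a hardware-anchored scheme would keep keys in dedicated hardware rather than in a dictionary.

```python
import hashlib
import hmac


def sign(key: bytes, agent_id: str, message: str) -> str:
    """Compute an HMAC-SHA256 tag binding the message to the agent's identity."""
    payload = f"{agent_id}\n{message}".encode()
    return hmac.new(key, payload, hashlib.sha256).hexdigest()


def verify(keys: dict[str, bytes], agent_id: str, message: str, tag: str) -> bool:
    """Accept a request only if the tag matches the registered agent's key."""
    key = keys.get(agent_id)
    if key is None:
        return False  # unknown agents are rejected outright
    expected = sign(key, agent_id, message)
    # Constant-time comparison avoids leaking how much of the tag matched.
    return hmac.compare_digest(expected, tag)
```

A request whose message was altered after signing, or that comes from an unregistered agent, fails verification regardless of what the prompt text says.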

3. Multi-layer assurance becomes the new regulatory expectation

Governments and auditors will increasingly demand:

• Proof of reasoning (formal methods)

• Proof of containment (Zero Trust)

• Proof of integrity (immutable audit)

The era of “trust the model” is ending.

The era of “prove it, then enforce it” is beginning.
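The "proof of integrity" item in the list above can be illustrated with a hash-chained log, a common building block for tamper-evident audit trails. This is a minimal sketch with hypothetical names, not the audit mechanism described in the attached documents. On its own, a hash chain only detects edits; anchoring the latest hash somewhere external, such as a blockchain, is what would stop an attacker from quietly rewriting the whole chain.

```python
import hashlib
import json

GENESIS = "0" * 64  # placeholder "previous hash" for the first entry


def _digest(action: str, prev: str) -> str:
    body = json.dumps({"action": action, "prev": prev}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


def append_entry(log: list[dict], action: str) -> None:
    """Append an action whose hash commits to the previous entry's hash."""
    prev = log[-1]["hash"] if log else GENESIS
    log.append({"action": action, "prev": prev, "hash": _digest(action, prev)})


def verify_log(log: list[dict]) -> bool:
    """Recompute every hash in order; any edited or reordered entry fails."""
    prev = GENESIS
    for entry in log:
        if entry["prev"] != prev or entry["hash"] != _digest(entry["action"], prev):
            return False
        prev = entry["hash"]
    return True
```

Because each hash covers the one before it, changing any past action invalidates that entry and every entry after it.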

The Upshot

Today’s universal adversarial attack exposed a reality long known to researchers: AI without provable correctness or runtime enforcement cannot be trusted.

But the attached documents show that a solution is emerging.

The fusion of Lean4-based formal verification and TrustedPlatform-based Zero Trust containment provides a practical, scalable pathway to trustworthy AI—one capable of withstanding both internal hallucinations and external adversarial manipulation.

This combined approach answers the concerns raised by the AI guardrail-bypass attack:

AI must be both provably correct and operationally constrained.

References

- Formal Methods vs. TrustedPlatform (User-provided analysis).

- Mavani, D. “Lean4: How the Theorem Prover Works and Why It’s the New Competitive Edge in AI.” VentureBeat, Nov 23, 2025.

- Harmonic AI research on Lean4-verified mathematical reasoning (referenced on pages 4–5).

- DARPA analysis of formal methods and their role in eliminating entire classes of software vulnerabilities (summarized in user-provided document).
